Spark setup

Last updated on 27th January 2026

This page explains how to set up and use the Simudyne SDK on top of Spark to distribute your models.

The first requirement is to install Spark, running either standalone or on top of Hadoop YARN. The required version is Spark 2.2.

We recommend using the version of Spark shipped with Cloudera products: https://www.cloudera.com/products/open-source/apache-hadoop/apache-spark.html

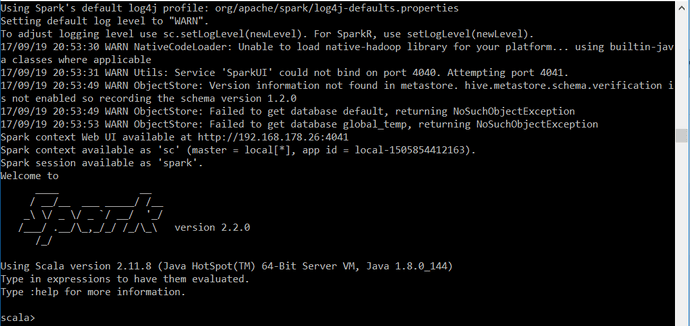

Once Spark is installed, you can check that it is running correctly by launching the Spark shell in a terminal:

./bin/spark-shell

You need to identify your Spark master URL, which points to the master node of your cluster. Above, the master URL indicates that Spark is running locally (master = local[*]). On a standalone cluster the master URL is generally of the form spark://host:port; if you use Hadoop YARN, it is yarn.
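As a quick illustration of the three master URL forms mentioned above, the following sketch classifies a master URL string. The function name `classify_master` is hypothetical and not part of Spark or the Simudyne SDK; it only pattern-matches the string and does not contact any cluster.

```shell
#!/bin/sh
# classify_master (hypothetical helper): print which kind of deployment
# a Spark master URL refers to, based only on its textual form.
classify_master() {
  case "$1" in
    local|local\[*\]) echo "local" ;;       # local, local[*], local[4], ...
    spark://*:*)      echo "standalone" ;;  # spark://host:port
    yarn)             echo "yarn" ;;        # Hadoop YARN
    *)                echo "unknown" ;;
  esac
}

# Example usage (assumes Spark is installed in the current directory):
#   url="spark://host:7077"
#   classify_master "$url"
#   ./bin/spark-shell --master "$url"
```

The same URL is what you later pass to the `--master` option when submitting a job.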

You can then start your project from one of the quickstart projects, preconfigured for Spark:

- Maven: https://github.com/simudyne/simudyne-maven-java-spark

- SBT: https://github.com/simudyne/simudyne-sbt-java-spark

Clone or download the repository and set up your credentials as you would for a standard Simudyne project.

Uncomment the following line in your properties file to make the Simudyne SDK use Spark as its backend implementation:

simudyneSDK.properties

### CORE-ABM-SPARK ###

core-abm.backend-implementation=simudyne.core.graph.spark.SparkGraphBackend

You then have two ways to configure Spark properties:

- modify the core-abm-spark properties in the simudyneSDK.properties file

- set configuration parameters as command-line parameters when using the spark-submit command

Be aware that a property set in the simudyneSDK.properties file overrides the same property passed to spark-submit.

To run your model, you need to build a fat JAR file containing your model, the Simudyne SDK, and all the necessary dependencies. You then need to upload it to the master node of your cluster, from which you can submit your Spark jobs.

Here are the commands to build your fat JAR file:

Maven

mvn -s settings.xml compile package

SBT

sbt assembly

You can then upload this JAR file to your master node via SSH and submit your job with:

spark2-submit --class Main --master <sparkMasterURL> --deploy-mode client --num-executors 30 --executor-cores 5 --executor-memory 30G --conf "spark.executor.extraJavaOptions=-XX:+UseG1GC" --files simudyneSDK.properties name-of-the-fat-jar.jar

You should set the --num-executors, --executor-cores, and --executor-memory parameters according to your own cluster resources. Useful resource: http://blog.cloudera.com/blog/2015/03/how-to-tune-your-apache-spark-jobs-part-2/
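As a rough starting point for choosing these three values, the sketch below applies a common sizing rule of thumb for YARN clusters. Everything in it is an assumption rather than a Simudyne recommendation: the function name `size_executors` is hypothetical, one core and 1 GB per node are reserved for the operating system and Hadoop daemons, one executor slot is left for the YARN ApplicationMaster, and about 10% of each executor's memory share is set aside for off-heap overhead. Adjust the constants to your own cluster and Spark version.

```shell
#!/bin/sh
# size_executors NODES CORES_PER_NODE MEM_PER_NODE_GB
# Hypothetical helper: prints suggested spark-submit sizing flags using a
# rule-of-thumb calculation (all constants below are assumptions).
size_executors() {
  se_nodes=$1
  se_cores=$2
  se_mem=$3
  se_cores_per_exec=5   # assumed executor width, as in the command above
  se_overhead_pct=10    # assumed off-heap overhead, in percent

  # Reserve 1 core and 1 GB per node for the OS and Hadoop daemons.
  se_per_node=$(( (se_cores - 1) / se_cores_per_exec ))
  # Leave one executor slot for the YARN ApplicationMaster.
  se_total=$(( se_nodes * se_per_node - 1 ))
  if [ "$se_per_node" -lt 1 ] || [ "$se_total" -lt 1 ]; then
    echo "cluster too small for ${se_cores_per_exec}-core executors" >&2
    return 1
  fi

  # Split the remaining node memory between executors, minus the overhead.
  se_share=$(( (se_mem - 1) / se_per_node ))
  se_exec_mem=$(( se_share * (100 - se_overhead_pct) / 100 ))

  echo "--num-executors $se_total --executor-cores $se_cores_per_exec --executor-memory ${se_exec_mem}G"
}

# Example: 6 worker nodes, each with 16 cores and 64 GB of memory.
size_executors 6 16 64
```

The printed flags can be pasted into the submit command in place of the example values. Treat them as a first guess to refine by monitoring your job.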