Distributed Requirements

Last updated on 27th January 2026

Running on Hadoop

The Simudyne SDK only requires that you have a Hadoop based environment with HDFS, Yarn, and Spark 3.2+ installed onto your cluster. Optionally if you wish to use Parquet with Hive that will also need to be installed see here on how to configure.

Because of the usage of the driver/worker nodes Java 8+ is required on all nodes, however most recent versions of Hadoop installs should satisfy this.

Our recommended setup is to make usage of Cloudera CDP for connecting to your existing or Azure/AWS environments. A Data Engineering template with inclusion of Spark is recommended for usage with Simudyne's SDK.

However, as long as you have a valid Hadoop cluster with Spark, Spark on Yarn, and HDFS you should be able to work with the SDK. The core requirement is being able to submit a spark job that includes a singularly packaged jar file of your simulation along with any configuration or data lakes included.

Setting up Spark

The first requirement is to install Spark , running standalone or on top of Hadoop YARN. **The required version is Spark 3.2+ **

We recommend using the version of Spark running on Cloudera products : https://www.cloudera.com/products/open-source/apache-hadoop/apache-spark.html

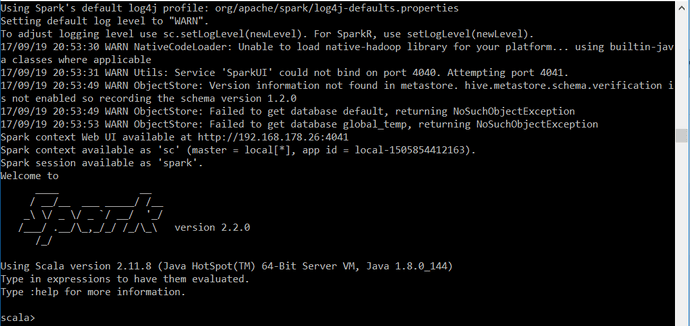

Once Spark is installed you can check it is running correctly launching the Spark-shell in a terminal :

./bin/spark-shellYou need to identify your Spark master URL which points towards the master node of your cluster. Above, the master URL indicates Spark is running locally (master = local[*]).

The master URL should generally be a spark://host:port type of URL on a standalone cluster.